Track notebooks & scripts¶

This guide explains how to use track() & finish() to track notebook & scripts along with their inputs and outputs.

For tracking data lineage in pipelines, see Pipelines – workflow managers.

# !pip install 'lamindb[jupyter]'

!lamin init --storage ./test-track

Show code cell output

→ connected lamindb: testuser1/test-track

Track data lineage¶

Call track() to register a data transformation and start tracking inputs & outputs of a run. You will find your notebook or script in the Transform registry along with pipelines & functions. Run stores executions.

import lamindb as ln

# --> `ln.track()` generates a uid for your code

# --> `ln.track(uid)` initiates a tracked run

ln.track("9priar0hoE5u0000")

# your code

ln.finish() # mark run as finished, save execution report, source code & environment

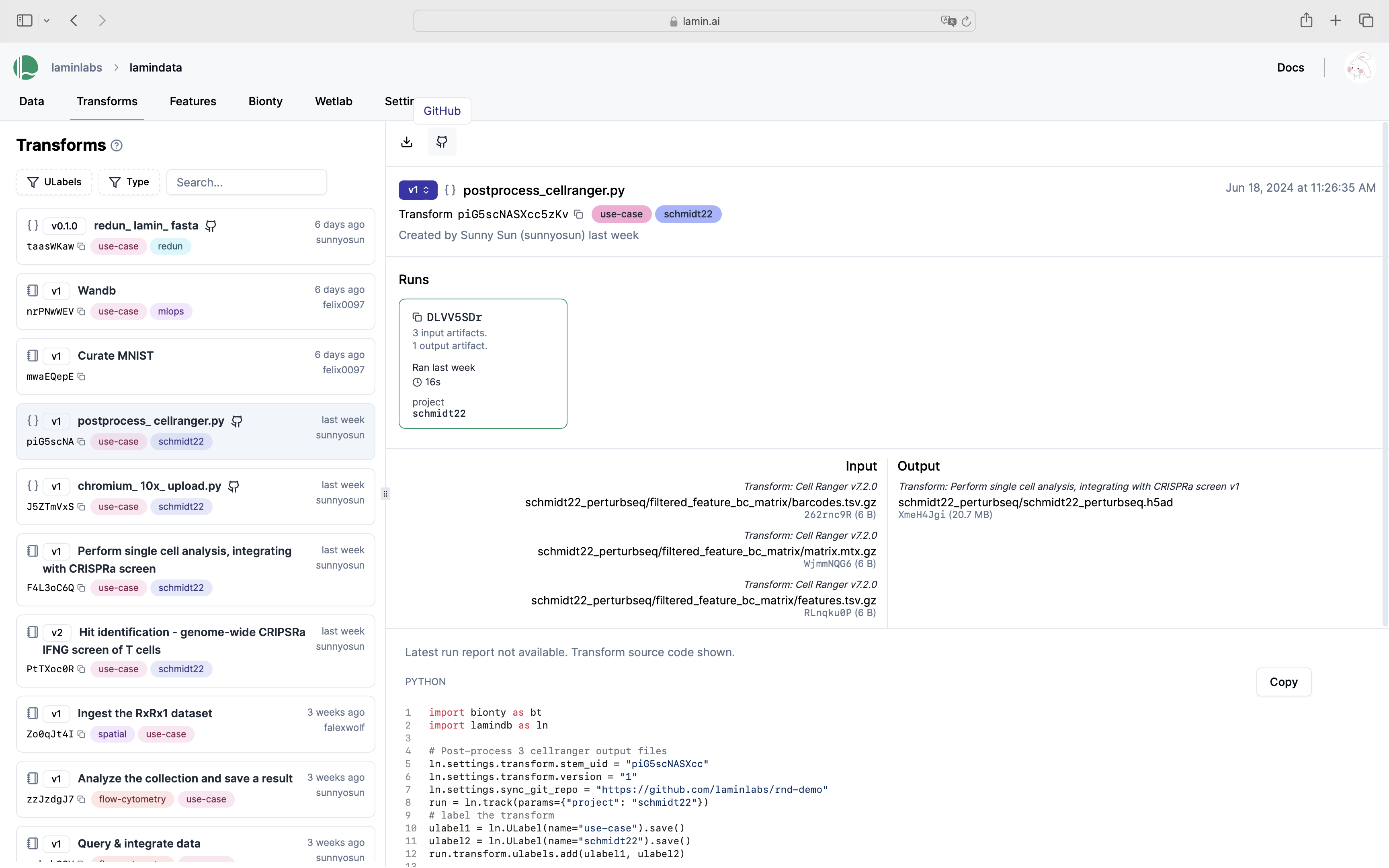

Below is how a notebook with run report looks on the hub.

Query & load a notebook or script¶

In the API, filter Transform to obtain a transform record:

transform = ln.Transform.get(name="Track notebooks & scripts")

transform.source_code # source code

transform.latest_run.report # report of latest run

transform.latest_run.environment # environment of latest run

transform.runs # all runs

On the hub, search or filter the transform page and then load a script or notebook on the CLI. For example,

lamin load https://lamin.ai/laminlabs/lamindata/transform/13VINnFk89PE0004

Sync scripts with GitHub¶

To sync with your git commit, add the following line to your script:

ln.settings.sync_git_repo = <YOUR-GIT-REPO-URL>

import lamindb as ln

ln.settings.sync_git_repo = "https://github.com/..."

ln.track()

# your code

ln.finish()

You’ll now see the GitHub emoji clickable on the hub.

Track run parameters¶

LaminDB’s validation dialogue auto-generates code for run parameters. Here is an example:

import lamindb as ln

ln.Param(name="input_dir", dtype="str").save()

ln.Param(name="learning_rate", dtype="float").save()

ln.Param(name="preprocess_params", dtype="dict").save()

Show code cell output

→ connected lamindb: testuser1/test-track

/opt/hostedtoolcache/Python/3.12.7/x64/lib/python3.12/site-packages/anndata/_io/__init__.py:12: FutureWarning: Importing read_zarr from `anndata._io` is deprecated. Please use anndata.io instead.

warnings.warn(

Param(name='preprocess_params', dtype='dict', created_by_id=1, created_at=2024-11-13 13:00:22 UTC)

import argparse

import lamindb as ln

if __name__ == "__main__":

p = argparse.ArgumentParser()

p.add_argument("--input-dir", type=str)

p.add_argument("--downsample", action="store_true")

p.add_argument("--learning-rate", type=float)

args = p.parse_args()

params = {

"input_dir": args.input_dir,

"learning_rate": args.learning_rate,

"preprocess_params": {

"downsample": args.downsample,

"normalization": "the_good_one",

},

}

ln.track("JjRF4mACd9m00001", params=params)

# your code

ln.finish()

Run the script.

!python scripts/run-track-with-params.py --input-dir ./mydataset --learning-rate 0.01 --downsample

Show code cell output

→ connected lamindb: testuser1/test-track

/opt/hostedtoolcache/Python/3.12.7/x64/lib/python3.12/site-packages/anndata/_io/__init__.py:12: FutureWarning: Importing read_zarr from `anndata._io` is deprecated. Please use anndata.io instead.

warnings.warn(

→ created Transform('JjRF4mAC'), started new Run('ioTNimxU') at 2024-11-13 13:00:26 UTC

→ params: input_dir='./mydataset' learning_rate='0.01' preprocess_params='{'downsample': True, 'normalization': 'the_good_one'}'

→ finished Run('ioTNimxU') after 0h 0m 1s at 2024-11-13 13:00:28 UTC

Query by run parameters¶

Query for all runs that match a certain parameters:

ln.Run.params.filter(learning_rate=0.01, input_dir="./mydataset", preprocess_params__downsample=True).df()

Show code cell output

| uid | started_at | finished_at | is_consecutive | reference | reference_type | transform_id | report_id | environment_id | parent_id | created_at | created_by_id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | ||||||||||||

| 1 | ioTNimxUd4IiM62dQCjA | 2024-11-13 13:00:26.991545+00:00 | 2024-11-13 13:00:28.700203+00:00 | True | None | None | 1 | None | 1 | None | 2024-11-13 13:00:26.991608+00:00 | 1 |

Note that:

preprocess_params__downsample=Truetraverses the dictionarypreprocess_paramsto find the key"downsample"and match it toTruenested keys like

"downsample"in a dictionary do not appear inParamand hence, do not get validated

Below is how you get the parameter values that were used for a given run.

run = ln.Run.params.filter(learning_rate=0.01).order_by("-started_at").first()

run.params.get_values()

Show code cell output

{'input_dir': './mydataset',

'learning_rate': 0.01,

'preprocess_params': {'downsample': True, 'normalization': 'the_good_one'}}

Or on the hub.

If you want to query all parameter values across all runs, use ParamValue.

ln.core.ParamValue.df(include=["param__name", "created_by__handle"])

Show code cell output

| param__name | created_by__handle | value | param_id | created_at | created_by_id | |

|---|---|---|---|---|---|---|

| id | ||||||

| 1 | input_dir | testuser1 | ./mydataset | 1 | 2024-11-13 13:00:27.010955+00:00 | 1 |

| 2 | learning_rate | testuser1 | 0.01 | 2 | 2024-11-13 13:00:27.011020+00:00 | 1 |

| 3 | preprocess_params | testuser1 | {'downsample': True, 'normalization': 'the_goo... | 3 | 2024-11-13 13:00:27.011074+00:00 | 1 |

Manage notebook templates¶

A notebook acts like a template upon using lamin load to load it. Consider you run:

lamin load https://lamin.ai/account/instance/transform/Akd7gx7Y9oVO0000

Upon running the returned notebook, you’ll automatically create a new version and be able to browse it via the version dropdown on the UI.

Additionally, you can:

label using

ULabel, e.g.,transform.ulabels.add(template_label)tag with an indicative

versionstring, e.g.,transform.version = "T1"; transform.save()

Saving a notebook as an artifact

Sometimes you might want to save a notebook as an artifact. This is how you can do it:

lamin save template1.ipynb --key templates/template1.ipynb --description "Template for analysis type 1" --registry artifact

Show code cell content

assert run.params.get_values() == {'input_dir': './mydataset', 'learning_rate': 0.01, 'preprocess_params': {'downsample': True, 'normalization': 'the_good_one'}}

# clean up test instance

!rm -r ./test-track

!lamin delete --force test-track

• deleting instance testuser1/test-track